(Platform Spotlight) Configure HDFS Data Temperature Report (Parcel)

The HDFS Data Temperature report shows the data temperature across a cluster’s entire Hadoop Distributed File System (HDFS) space—how many hot, warm, and cold files are on the HDFS file system; the total sizes for hot, warm, and cold files; and what the configured storage policies are.

Using this data, you can optimize your storage use by changing storage policies, particularly for files whose storage policies are not set to cold but whose last access dates match the cold-file definition.

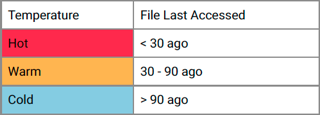

The HDFS Data Temperature report for your clusters includes the definitions of hot, warm, and cold files that apply to your Pepperdata implementation. The image shows the default definitions, but yours might be different, so be sure to check with your System Administrator.

By default, the HDFS Data Temperature report runs on the standby NameNode. If failover occurs, the report stops running on the former standby NameNode and starts running on the new standby NameNode. You can override this default and configure the report to run on a specific host other than the NameNode hosts.

By default, Pepperdata reads the FsImage files once during a scheduled read window of 00:00–23:00 on Saturdays. You can override the default frequency of reads and/or the scheduled read window by changing the Pepperdata configuration.

Before you can access the HDFS Data Temperature report from the Pepperdata dashboard, you must configure your Pepperdata installation to collect the necessary metrics and to upload those metrics to the Pepperdata backend data storage.


Prerequisites

Before you begin configuring your cluster for the HDFS Data Temperature report, ensure that your system meets the following prerequisites.

- The Pepperdata PepAgent must be installed on every host that you want monitored for HDFS data temperature.
- The monitored hosts must be configured for Hadoop, as required by the Hadoop Distributed File System (HDFS).
- On the Pepperdata backend, the HDFS Data Temperature report must be enabled for your cluster. To request or confirm that the report is enabled, contact Pepperdata Support.
- The host(s) on which the report is to run must be HDFS Gateway nodes.
- On the host(s) where the report runs, the `PD_USER` must be the `root` user, the `hdfs` user, or a user with permission to read from the directory that holds the FsImage files (which you specify by using the `pepperdata.agent.genericJsonFetch.hdfsTiering.namenodeFsimageDir` property) and from the Hadoop configuration directory that contains the `hdfs-site.xml` and `core-site.xml` files (which you specify by using the `PD_HDFS_TIERING_CONF_DIR` environment variable).
- (Kerberized clusters on CDH and CDP Private Cloud Base) Be sure that you selected Enable Access to Kerberized Cluster Components during the installation process (Task 2: Add Pepperdata Service to Cloudera Manager). (For CDP Public Cloud, Pepperdata automatically enables this for Kerberized clusters.)

  This ensures that Pepperdata correctly assigns the `PD_AGENT_PRINCIPAL` and `PD_AGENT_KEYTAB_LOCATION` environment variables.

  - The `PD_AGENT_PRINCIPAL` is used only for authentication (not authorization).
  - It does not need HDFS access.
  - It needs to be set only on the host(s) where the report is to run.
- Access times for HDFS must be enabled; that is, the `dfs.namenode.accesstime.precision` property must have a non-zero value.
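Two HDFS settings that this page relies on are defined in `hdfs-site.xml`. One is the access-time prerequisite above. The other is `dfs.namenode.name.dir`, on which the FsImage directory parameter in the procedure is based. The following excerpt is an illustrative sketch, not a required configuration. `3600000` milliseconds (one hour) is the Apache Hadoop default for `dfs.namenode.accesstime.precision`, and `/dfs/nn` is assumed here because it matches the default FsImage directory named in the procedure. Check the actual values on your cluster; on a Cloudera Manager-managed cluster you would normally view and change them through Cloudera Manager rather than by editing the file directly.

```xml
<!-- Illustrative hdfs-site.xml excerpt; values are examples, check your cluster -->
<property>
  <!-- Access-time precision in milliseconds. Any non-zero value enables
       HDFS access times; 0 disables them.
       3600000 (1 hour) is the Apache Hadoop default. -->
  <name>dfs.namenode.accesstime.precision</name>
  <value>3600000</value>
</property>
<property>
  <!-- NameNode metadata directory (may be a comma-separated list).
       The FsImage files are in its "current" subdirectory, for example
       /dfs/nn/current, but the Pepperdata FsImage directory parameter
       takes the path without "/current", for example /dfs/nn. -->
  <name>dfs.namenode.name.dir</name>
  <value>/dfs/nn</value>
</property>
```

If `dfs.namenode.name.dir` listed several locations, for example the hypothetical value `/dfs/nn,/data1/dfs/nn`, any one of those locations (again without `/current`) could be used as the FsImage directory.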

Procedure

- In Cloudera Manager, create a PepAgent NameNode Group role group in the Pepperdata configuration for the HDFS NameNode hosts in the cluster:
  - Group Name: PepAgent NameNode Group
  - Role Type: PepAgent
  - Copy From: PepAgent Default Group
- Move the HDFS NameNode hosts from the PepAgent Default Group to the PepAgent NameNode Group that you created.

- Add the reporting parameters to the Pepperdata PepAgent configuration.
  - Enable the HDFS Tiering Fetcher (to fetch HDFS metadata).

    In Cloudera Manager, locate the Enable HDFS Tiering Fetcher parameter, and select it for the PepAgent Default Group.

  - (Optional; default=100000 [100K]) Change the number of inodes (HDFS representations of files and directories) to read during every 15-second interval. The larger the value, the faster Pepperdata reads the inodes, and the more memory is needed to process the data (which is configured by the Java Heap Size of PepAgent parameter, below). The default value typically provides a good balance.

    To change this, locate the HDFS Tiering Max inodes parameter, and change its value.

  - (Optional; default=/dfs/nn) Change the location of the FsImage files, which hold metadata about the file system namespace, including the mapping of blocks to files and file system properties.
    - Although the FsImage files are located in a `current` subdirectory of the location specified by the `dfs.namenode.name.dir` property in the `hdfs-site.xml` configuration file, do not enter the `/current` part of the file path. Pepperdata automatically appends `/current` to the path that you enter.
    - If your `dfs.namenode.name.dir` property value is a comma-separated list of multiple locations, you can choose any of the locations.

    To change this, locate the HDFS Tiering NameNode FsImage Directory parameter, and change it to your location.

  - (Optional; default=5 minutes) Change how frequently the Pepperdata metrics data file rolls over to a newly opened file. Together with the number of inodes read during every 15-second interval (which is configured by the HDFS Tiering Max inodes parameter, above), this controls the number of entries that Pepperdata processes from one file.

    To change this, locate the JSON Fetch Rolling Log Interval parameter, and change its value.

  - Configure the HDFS service dependency.

    Locate the HDFS Service parameter, and select the cluster's HDFS service.

  - (Optional; default=128m) Change the amount of memory that the PepAgent uses to process the inodes, from `128m` to `2g` (inclusive). The greater the number of inodes read during every 15-second interval (which is configured by the HDFS Tiering Max inodes parameter, above), the more memory is required.

    To change this, locate the Java Heap Size of PepAgent parameter, and change its value.

- (To run the report on a non-NameNode host) Configure where the HDFS Data Temperature report is to run.

  If you are running the report on the NameNode hosts, skip this step.

  Use Cloudera Manager to add the following snippet to the Pepperdata > Configuration > PepAgent > PepAgent Environment Advanced Configuration Snippet (Safety Valve) template.

  Be sure to substitute your fully qualified, canonical hostname for the `YOUR.CANONICAL.HOSTNAME` placeholder in the following code snippet; for example, `ip-192-168-1-1.internal`.

  ```
  PD_HDFS_TIERING_RUN_ON_HOST=YOUR.CANONICAL.HOSTNAME
  ```

- (To override the default scheduled read window for FsImage files and/or the frequency of reads during the read window) Add your scheduled read window for FsImage files and/or your frequency of reads to the Pepperdata configuration.

  If the default scheduled read window and interval for FsImage files are acceptable (once during the window of 00:00–23:00 on Saturdays), you do not need to add anything to the Pepperdata configuration; skip this step.

  Navigate to the Pepperdata > Configuration > PepAgent > PepAgent Advanced Configuration Snippet (Safety Valve) for conf/pepperdata-site.xml template, and add the following configuration snippet to the PepAgent NameNode Group as an XML block.

  - Be sure to substitute your cron schedule and read frequency for the `your-cron-schedule` (in Quartz Cron format) and `your-read-frequency` (in seconds) placeholders.
  - We recommend that you use as long a read window as possible, so that even if the PepAgent is restarted or a NameNode failover occurs, the PepAgent can still begin the read during the read window.

    Also, given the nature of the data (cold files have not been accessed in more than 90 days, and even warm files were last accessed 30–90 days ago), the numbers of hot, warm, and cold files change very infrequently by definition. So there is no advantage to constantly reading "newer" data.

    Tip: To generate or validate your Quartz Cron expression, use the Cron Expression Generator & Explainer - Quartz. For example, if you want to change the read day from Saturdays to Tuesdays, the cron schedule would be `* * 0-23 ? * TUE`.
  - We recommend that the read interval correspond to the length of the read window, so that only a single read is performed during the window; for example, if the window is one day, set the interval to `86400` (seconds). The FsImage files can be very large (upwards of 50 GB), and storing multiple files (which are ignored, because report generation uses only the most recent file) wastes storage resources and imposes large loads on the NameNode.

  ```xml
  <property>
    <name>pepperdata.agent.genericJsonFetch.hdfsTiering.cronSchedule</name>
    <value>your-cron-schedule</value>
  </property>
  <property>
    <name>pepperdata.agent.genericJsonFetch.hdfsTiering.readIntervalSecs</name>
    <value>your-read-frequency</value>
  </property>
  ```

- Restart the Pepperdata services.

  In Cloudera Manager, select the Restart action for the PepAgent and PepCollector services.
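The following example fills in the placeholders from the read-window step, using only values that the step itself suggests. The schedule is the Tuesday expression from the Quartz Cron tip (`* * 0-23 ? * TUE`), and the read interval is `86400` seconds, so only a single read happens during the one-day window. This is a sketch for illustration, not a schedule recommended for every cluster; choose the day and window that suit your workload. The comments describe the standard Quartz field order.

```xml
<!-- Example safety-valve snippet for conf/pepperdata-site.xml
     (PepAgent NameNode Group): read FsImage files on Tuesdays. -->
<property>
  <!-- Quartz Cron fields: seconds minutes hours day-of-month month day-of-week.
       Any second and minute, hours 0-23, no specific day of month ("?"),
       every month, Tuesday only. -->
  <name>pepperdata.agent.genericJsonFetch.hdfsTiering.cronSchedule</name>
  <value>* * 0-23 ? * TUE</value>
</property>
<property>
  <!-- One day in seconds (24 * 60 * 60), matching the one-day window
       so that only a single read is performed during the window. -->
  <name>pepperdata.agent.genericJsonFetch.hdfsTiering.readIntervalSecs</name>
  <value>86400</value>
</property>
```

As with any change to this snippet, the PepAgent and PepCollector services need to be restarted for it to take effect.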